UPDATE – CrashPlan For Home (green branding) was retired by Code 42 Software on 22/08/2017. See migration notes below to find out how to transfer to CrashPlan for Small Business on Synology at the special discounted rate.

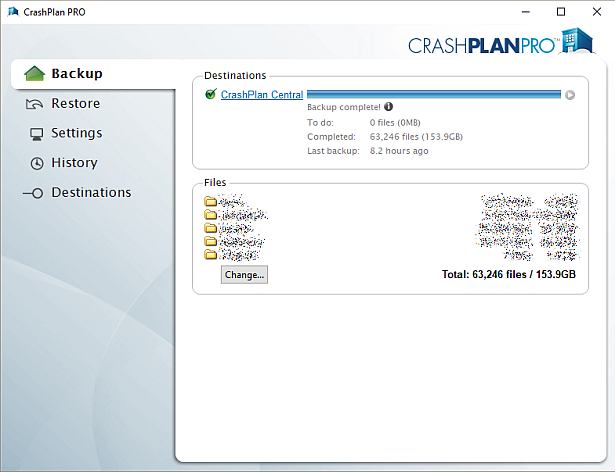

CrashPlan is a popular online backup solution which supports continuous syncing. With this your NAS can become even more resilient, particularly against the threat of ransomware.

There are now only two product versions:

- Small Business: CrashPlan PRO (blue branding). Unlimited cloud backup subscription, $10 per device per month. Reporting via Admin Console. No peer-to-peer backups

- Enterprise: CrashPlan PROe (black branding). Cloud backup subscription typically billed by storage usage, also available from third parties.

The instructions and notes on this page apply to both versions of the Synology package.

CrashPlan is a Java application which can be difficult to install on a NAS. Way back in January 2012 I decided to simplify it into a Synology package, since I had already created several others. It has been through many versions since that time, as the changelog below shows. Although it used to work on Synology products with ARM and PowerPC CPUs, it unfortunately became Intel-only in October 2016 due to Code 42 Software adding a reliance on some proprietary libraries.

Licence compliance is another challenge – Code 42’s EULA prohibits redistribution. I had to make the Synology package use the regular CrashPlan for Linux download (after the end user agrees to the Code 42 EULA). I then had to write my own script to extract this archive and mimic the Code 42 installer behaviour, but without the interactive prompts of the original.

Synology Package Installation

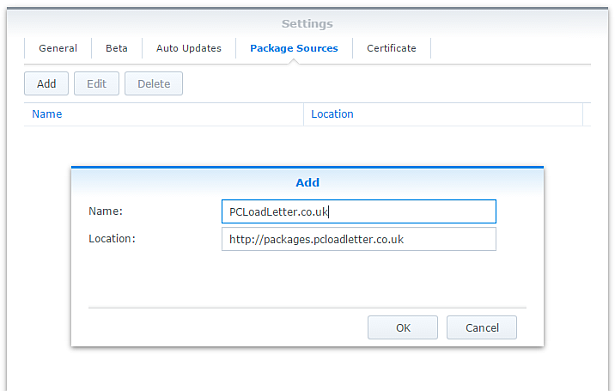

- In Synology DSM’s Package Center, click Settings and add my package repository:

- The repository will push its certificate automatically to the NAS, which is used to validate package integrity. Set the Trust Level to Synology Inc. and trusted publishers:

- Now browse the Community section in Package Center to install CrashPlan:

The repository only displays packages which are compatible with your specific model of NAS. If you don’t see CrashPlan in the list, then either your NAS model or your DSM version is not supported at this time. DSM 5.0 is the minimum supported version for this package, and an Intel CPU is required.

- Since CrashPlan is a Java application, it needs a Java Runtime Environment (JRE) to function. It is recommended that you select to have the package install a dedicated Java 8 runtime. For licensing reasons I cannot include Java with this package, so you will need to agree to the licence terms and download it yourself from Oracle’s website. The package expects to find this .tar.gz file in a shared folder called ‘public’. If you go ahead and try to install the package without it, the error message will indicate precisely which Java file you need for your system type, and it will provide a TinyURL link to the appropriate Oracle download page.

- To install CrashPlan PRO you will first need to log into the Admin Console and download the Linux App from the App Download section and also place this in the ‘public’ shared folder on your NAS.

- If you have a multi-bay NAS, use the Shared Folder control panel to create the shared folder called public (it must be all lower case). On single bay models this is created by default. Assign it with Read/Write privileges for everyone.

- If you have trouble getting the Java or CrashPlan PRO app files recognised by this package, try downloading them with Firefox. It seems to be the only web browser that doesn’t try to uncompress the files, or rename them without warning. I also suggest that you leave the Java file and the public folder present once you have installed the package, so that you won’t need to fetch this again to install future updates to the CrashPlan package.

- CrashPlan is installed in headless mode – backup engine only. This will be configured by a desktop client, but operates independently of it.

- The first time you start the CrashPlan package you will need to stop it and restart it before you can connect the client. This is because a config file that is only created on first run needs to be edited by one of my scripts. The engine is then configured to listen on all interfaces on the default port 4243.

CrashPlan Client Installation

- Once the CrashPlan engine is running on the NAS, you can manage it by installing CrashPlan on another computer, and by configuring it to connect to the NAS instance of the CrashPlan Engine.

- Make sure that you install the version of the CrashPlan client that matches the version running on the NAS. If the NAS version gets upgraded later, you will need to update your client computer too.

- The Linux CrashPlan PRO client must be downloaded from the Admin Console and placed in the ‘public’ folder on your NAS in order to successfully install the Synology package.

- By default the client is configured to connect to the CrashPlan engine running on the local computer. Run this command on your NAS from an SSH session:

echo `cat /var/lib/crashplan/.ui_info`

Note those are backticks not quotes. This will give you a port number (4243), followed by an authentication token, followed by the IP binding (0.0.0.0 means the server is listening for connections on all interfaces) e.g.:

4243,9ac9b642-ba26-4578-b705-124c6efc920b,0.0.0.0

port,--------------token-----------------,binding

Copy this token value and use it to replace the token in the equivalent config file on the computer where you would like to run the CrashPlan client – located here:

C:\ProgramData\CrashPlan\.ui_info (Windows)

“/Library/Application Support/CrashPlan/.ui_info” (Mac OS X installed for all users)

“~/Library/Application Support/CrashPlan/.ui_info” (Mac OS X installed for single user)

/var/lib/crashplan/.ui_info (Linux)

You will not be able to connect the client unless the client token matches the NAS token. On the client you also need to amend the IP address value after the token to match the Synology NAS IP address.

So, using the example above, your computer’s CrashPlan client config file would be edited to:

4243,9ac9b642-ba26-4578-b705-124c6efc920b,192.168.1.100

assuming that the Synology NAS has the IP 192.168.1.100
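The edit described above can be sketched as a small shell helper. This is only an illustration: `make_client_ui_info` is a hypothetical function name, not something shipped with the package or with CrashPlan, and it assumes the NAS line has exactly the port,token,binding layout shown earlier.

```shell
#!/bin/sh
# Hypothetical helper (not part of the package): build the client-side
# .ui_info line from the line printed on the NAS.
make_client_ui_info() {
    nas_line="$1"   # e.g. 4243,<token>,0.0.0.0 as shown by the NAS
    nas_ip="$2"     # address of the NAS as seen from the client computer
    # Keep the port and token, swap the binding for the NAS address
    port=$(printf '%s' "$nas_line" | cut -d',' -f1)
    token=$(printf '%s' "$nas_line" | cut -d',' -f2)
    printf '%s,%s,%s\n' "$port" "$token" "$nas_ip"
}

# Using the example values from this page:
make_client_ui_info "4243,9ac9b642-ba26-4578-b705-124c6efc920b,0.0.0.0" "192.168.1.100"
```

The printed line is what you would paste into the client's .ui_info file, after making a copy of the existing file.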

If it still won’t connect, check that the ServicePort value is set to 4243 in the following files:

C:\ProgramData\CrashPlan\conf\ui_(username).properties (Windows)

“/Library/Application Support/CrashPlan/ui.properties” (Mac OS X installed for all users)

“~/Library/Application Support/CrashPlan/ui.properties” (Mac OS X installed for single user)

/usr/local/crashplan/conf (Linux)

/var/lib/crashplan/.ui_info (Synology) – this value does change spontaneously if there’s a port conflict e.g. you started two versions of the package concurrently (CrashPlan and CrashPlan PRO)

- As a result of the nightmarish complexity of recent product changes Code42 has now published a support article with more detail on running headless systems including config file locations on all supported operating systems, and for ‘all users’ versus single user installs etc.

- You should disable the CrashPlan service on your computer if you intend only to use the client. In Windows, open the Services section in Computer Management and stop the CrashPlan Backup Service. In the service Properties set the Startup Type to Manual. You can also disable the CrashPlan System Tray notification application by removing it from Task Manager > More Details > Start-up Tab (Windows 8/Windows 10) or the All Users Startup Start Menu folder (Windows 7).

To accomplish the same on Mac OS X, run the following commands one by one:

sudo launchctl unload /Library/LaunchDaemons/com.crashplan.engine.plist

sudo mv /Library/LaunchDaemons/com.crashplan.engine.plist /Library/LaunchDaemons/com.crashplan.engine.plist.bak

The CrashPlan menu bar application can be disabled in System Preferences > Users & Groups > Current User > Login Items

Migration from CrashPlan For Home to CrashPlan For Small Business (CrashPlan PRO)

- Leave the regular green branded CrashPlan 4.8.3 Synology package installed.

- Go through the online migration using the link in the email notification you received from Code 42 on 22/08/2017. This seems to trigger the CrashPlan client to begin an update to 4.9, which will fail. It will also migrate your account onto a CrashPlan PRO server. The web page is likely to stall on the Migrating step, but no matter. The process is meant to take you to the store but it seems to be quite flaky. If you see the store page with a $0.00 amount in the basket, this has correctly referred you for the introductory offer. Apparently the $9.99 price thereafter shown on that screen is a mistake, and I think the correct price of $2.50 is shown on a later screen in the process. Enter your credit card details and check out if you can. If not, continue.

- Log into the CrashPlan PRO Admin Console as per these instructions, and download the CrashPlan PRO 4.9 client for Linux, and the 4.9 client for your remote console computer. Ignore the red message in the bottom left of the Admin Console about registering, and do not sign up for the free trial. Preferably use Firefox for the Linux version download – most of the other web browsers will try to unpack the .tgz archive, which you do not want to happen.

- Configure the CrashPlan PRO 4.9 client on your computer to connect to your Syno as per the usual instructions on this blog post.

- Put the downloaded Linux CrashPlan PRO 4.9 client .tgz file in the ‘public’ shared folder on your NAS. The package will no longer download this automatically as it did in previous versions.

- From the Community section of DSM Package Center, install the CrashPlan PRO 4.9 package concurrently with your existing CrashPlan 4.8.3 Syno package.

- This will stop the CrashPlan package and automatically import its configuration. Notice that it will also back up your old CrashPlan .identity file and leave it in the ‘public’ shared folder, just in case something goes wrong.

- Start the CrashPlan PRO Synology package, and connect your CrashPlan PRO console from your computer.

- You should see your protected folders as usual. At first mine reported something like “insufficient device licences”, but the next time I started up it changed to “subscription expired”.

- Uninstall the CrashPlan 4.8.3 Synology package, which is no longer required.

- At this point if the store referral didn’t work in the second step, you need to sign into the Admin Console. While signed in, navigate to this link which I was given by Code 42 support. If it works, you should see a store page with some blue font text and a $0.00 basket value. If it didn’t work you will get bounced to the Consumer Next Steps webpage: “Important Changes to CrashPlan for Home” – the one with the video of the CEO explaining the situation. I had to do this a few times before it worked. Once the store referral link worked and I had confirmed my payment details my CrashPlan PRO client immediately started working. Enjoy!

Notes

- The package uses the intact CrashPlan installer directly from Code 42 Software, following acceptance of its EULA. I am complying with the directive that no one redistributes it.

- The engine daemon script checks the amount of system RAM and scales the Java heap size appropriately (up to the default maximum of 512MB). This can be overridden in a persistent way if you are backing up large backup sets by editing /var/packages/CrashPlan/target/syno_package.vars. If you are considering buying a NAS purely to use CrashPlan and intend to back up more than a few hundred GB then I strongly advise buying one of the models with upgradeable RAM. Memory is very limited on the cheaper models. I have found that a 512MB heap was insufficient to back up more than 2TB of files on a Windows server and that was the situation many years ago. It kept restarting the backup engine every few minutes until I increased the heap to 1024MB. Many users of the package have found that they have to increase the heap size or CrashPlan will halt its activity. This can be mitigated by dividing your backup into several smaller backup sets which are scheduled to be protected at different times. Note that from package version 0041, using the dedicated JRE on a 64bit Intel NAS will allow a heap size greater than 4GB since the JRE is 64bit (requires DSM 6.0 in most cases).

- If you need to manage CrashPlan from a remote location, I suggest you do so using SSH tunnelling as per this support document.

- The package supports upgrading to future versions while preserving the machine identity, logs, login details, and cache. Upgrades can now take place without requiring a login from the client afterwards.

- If you remove the package completely and re-install it later, you can re-attach to previous backups. When you log in to the Desktop Client with your existing account after a re-install, you can select “adopt computer” to merge the records, and preserve your existing backups. I haven’t tested whether this also re-attaches links to friends’ CrashPlan computers and backup sets, though the latter does seem possible in the Friends section of the GUI. It’s probably a good idea to test that this survives a package reinstall before you start relying on it. Sometimes, particularly with CrashPlan PRO I think, the adopt option is not offered. In this case you can log into CrashPlan Central and retrieve your computer’s GUID. On the CrashPlan client, double-click on the logo in the top right and you’ll enter a command line mode. You can use the GUID command to change the system’s GUID to the one you just retrieved from your account.

- The log which is displayed in the package’s Log tab is actually the activity history. If you are trying to troubleshoot an issue you will need to use an SSH session to inspect these log files:

/var/packages/CrashPlan/target/log/engine_output.log

/var/packages/CrashPlan/target/log/engine_error.log

/var/packages/CrashPlan/target/log/app.log

- When CrashPlan downloads and attempts to run an automatic update, the script will most likely fail and stop the package. This is typically caused by syntax differences with the Synology versions of certain Linux shell commands (like rm, mv, or ps). The startup script will attempt to apply the published upgrade the next time the package is started.

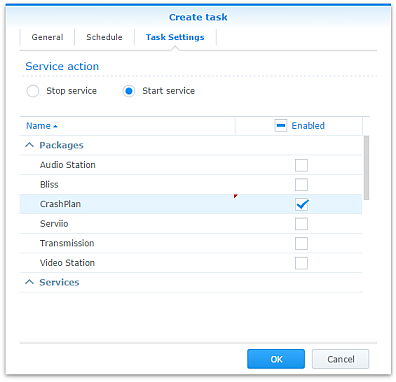

- Although CrashPlan’s activity can be scheduled within the application, in order to save RAM some users may wish to restrict running the CrashPlan engine to specific times of day using the Task Scheduler in DSM Control Panel:

Note that regardless of real-time backup, by default CrashPlan will scan the whole backup selection for changes at 3:00am. Include this time within your Task Scheduler time window or else CrashPlan will not capture file changes which occurred while it was inactive:

- If you decide to sign up for one of CrashPlan’s paid backup services as a result of my work on this, please consider donating using the PayPal button on the right of this page.
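As a rough illustration of the heap sizing mentioned in the notes above, the sketch below maps system RAM to a Java max heap value. The RAM thresholds are the ones visible in the start-stop-status.sh listing on this page. The function name `choose_heap_mb` and the rule that a larger user-supplied value wins are assumptions made for this example, not a copy of the package's logic.

```shell
#!/bin/sh
# Hypothetical sketch: pick a Java max heap size (in MB) from system RAM (in MB).
# Thresholds mirror the start-stop-status.sh listing; the override rule is assumed.
choose_heap_mb() {
    ram_mb="$1"
    user_mb="${2:-0}"
    # Accept values such as 1536M or 1536m, as in syno_package.vars
    user_mb=$(printf '%s' "$user_mb" | sed -e 's/[mM]//')
    if [ "$ram_mb" -le 128 ]; then
        heap=80
    elif [ "$ram_mb" -le 256 ]; then
        heap=192
    elif [ "$ram_mb" -le 512 ]; then
        heap=384
    elif [ "$ram_mb" -le 1024 ]; then
        heap=512
    else
        heap=1024
    fi
    # Assumed rule: a larger user-supplied value replaces the computed default
    if [ "$user_mb" -gt "$heap" ]; then
        heap="$user_mb"
    fi
    echo "$heap"
}

choose_heap_mb 2048 1536M
```

In the real package the user value comes from the USR_MAX_HEAP line in syno_package.vars, which is commented out by default.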

Package scripts

For information, here are the package scripts so you can see what it’s going to do. You can get more information about how packages work by reading the Synology 3rd Party Developer Guide.

installer.sh

#!/bin/sh

#--------CRASHPLAN installer script

#--------package maintained at pcloadletter.co.uk

DOWNLOAD_PATH="http://download2.code42.com/installs/linux/install/${SYNOPKG_PKGNAME}"

CP_EXTRACTED_FOLDER="crashplan-install"

OLD_JNA_NEEDED="false"

[ "${SYNOPKG_PKGNAME}" == "CrashPlan" ] && DOWNLOAD_FILE="CrashPlan_4.8.3_Linux.tgz"

[ "${SYNOPKG_PKGNAME}" == "CrashPlanPRO" ] && DOWNLOAD_FILE="CrashPlanPRO_4.*_Linux.tgz"

if [ "${SYNOPKG_PKGNAME}" == "CrashPlanPROe" ]; then

CP_EXTRACTED_FOLDER="${SYNOPKG_PKGNAME}-install"

OLD_JNA_NEEDED="true"

[ "${WIZARD_VER_483}" == "true" ] && { CPPROE_VER="4.8.3"; CP_EXTRACTED_FOLDER="crashplan-install"; OLD_JNA_NEEDED="false"; }

[ "${WIZARD_VER_480}" == "true" ] && { CPPROE_VER="4.8.0"; CP_EXTRACTED_FOLDER="crashplan-install"; OLD_JNA_NEEDED="false"; }

[ "${WIZARD_VER_470}" == "true" ] && { CPPROE_VER="4.7.0"; CP_EXTRACTED_FOLDER="crashplan-install"; OLD_JNA_NEEDED="false"; }

[ "${WIZARD_VER_460}" == "true" ] && { CPPROE_VER="4.6.0"; CP_EXTRACTED_FOLDER="crashplan-install"; OLD_JNA_NEEDED="false"; }

[ "${WIZARD_VER_452}" == "true" ] && { CPPROE_VER="4.5.2"; CP_EXTRACTED_FOLDER="crashplan-install"; OLD_JNA_NEEDED="false"; }

[ "${WIZARD_VER_450}" == "true" ] && { CPPROE_VER="4.5.0"; CP_EXTRACTED_FOLDER="crashplan-install"; OLD_JNA_NEEDED="false"; }

[ "${WIZARD_VER_441}" == "true" ] && { CPPROE_VER="4.4.1"; CP_EXTRACTED_FOLDER="crashplan-install"; OLD_JNA_NEEDED="false"; }

[ "${WIZARD_VER_430}" == "true" ] && CPPROE_VER="4.3.0"

[ "${WIZARD_VER_420}" == "true" ] && CPPROE_VER="4.2.0"

[ "${WIZARD_VER_370}" == "true" ] && CPPROE_VER="3.7.0"

[ "${WIZARD_VER_364}" == "true" ] && CPPROE_VER="3.6.4"

[ "${WIZARD_VER_363}" == "true" ] && CPPROE_VER="3.6.3"

[ "${WIZARD_VER_3614}" == "true" ] && CPPROE_VER="3.6.1.4"

[ "${WIZARD_VER_353}" == "true" ] && CPPROE_VER="3.5.3"

[ "${WIZARD_VER_341}" == "true" ] && CPPROE_VER="3.4.1"

[ "${WIZARD_VER_33}" == "true" ] && CPPROE_VER="3.3"

DOWNLOAD_FILE="CrashPlanPROe_${CPPROE_VER}_Linux.tgz"

fi

DOWNLOAD_URL="${DOWNLOAD_PATH}/${DOWNLOAD_FILE}"

CPI_FILE="${SYNOPKG_PKGNAME}_*.cpi"

OPTDIR="${SYNOPKG_PKGDEST}"

VARS_FILE="${OPTDIR}/install.vars"

SYNO_CPU_ARCH="`uname -m`"

[ "${SYNO_CPU_ARCH}" == "x86_64" ] && SYNO_CPU_ARCH="i686"

[ "${SYNO_CPU_ARCH}" == "armv5tel" ] && SYNO_CPU_ARCH="armel"

[ "${SYNOPKG_DSM_ARCH}" == "armada375" ] && SYNO_CPU_ARCH="armv7l"

[ "${SYNOPKG_DSM_ARCH}" == "armada38x" ] && SYNO_CPU_ARCH="armhf"

[ "${SYNOPKG_DSM_ARCH}" == "comcerto2k" ] && SYNO_CPU_ARCH="armhf"

[ "${SYNOPKG_DSM_ARCH}" == "alpine" ] && SYNO_CPU_ARCH="armhf"

[ "${SYNOPKG_DSM_ARCH}" == "alpine4k" ] && SYNO_CPU_ARCH="armhf"

[ "${SYNOPKG_DSM_ARCH}" == "monaco" ] && SYNO_CPU_ARCH="armhf"

[ "${SYNOPKG_DSM_ARCH}" == "rtd1296" ] && SYNO_CPU_ARCH="armhf"

NATIVE_BINS_URL="http://packages.pcloadletter.co.uk/downloads/crashplan-native-${SYNO_CPU_ARCH}.tar.xz"

NATIVE_BINS_FILE="`echo ${NATIVE_BINS_URL} | sed -r "s%^.*/(.*)%\1%"`"

OLD_JNA_URL="http://packages.pcloadletter.co.uk/downloads/crashplan-native-old-${SYNO_CPU_ARCH}.tar.xz"

OLD_JNA_FILE="`echo ${OLD_JNA_URL} | sed -r "s%^.*/(.*)%\1%"`"

INSTALL_FILES="${DOWNLOAD_URL} ${NATIVE_BINS_URL}"

[ "${OLD_JNA_NEEDED}" == "true" ] && INSTALL_FILES="${INSTALL_FILES} ${OLD_JNA_URL}"

TEMP_FOLDER="`find / -maxdepth 2 -path '/volume?/@tmp' | head -n 1`"

#the Manifest folder is where friends' backup data is stored

#we set it outside the app folder so it persists after a package uninstall

MANIFEST_FOLDER="/`echo $TEMP_FOLDER | cut -f2 -d'/'`/crashplan"

LOG_FILE="${SYNOPKG_PKGDEST}/log/history.log.0"

UPGRADE_FILES="syno_package.vars conf/my.service.xml conf/service.login conf/service.model"

UPGRADE_FOLDERS="log cache"

PUBLIC_FOLDER="`synoshare --get public | sed -r "/Path/!d;s/^.*\[(.*)\].*$/\1/"`"

#dedicated JRE section

if [ "${WIZARD_JRE_CP}" == "true" ]; then

DOWNLOAD_URL="http://tinyurl.com/javaembed"

EXTRACTED_FOLDER="ejdk1.8.0_151"

#detect systems capable of running 64bit JRE which can address more than 4GB of RAM

[ "${SYNOPKG_DSM_ARCH}" == "x64" ] && SYNO_CPU_ARCH="x64"

[ "`uname -m`" == "x86_64" ] && [ ${SYNOPKG_DSM_VERSION_MAJOR} -ge 6 ] && SYNO_CPU_ARCH="x64"

if [ "${SYNO_CPU_ARCH}" == "armel" ]; then

JAVA_BINARY="ejdk-8u151-linux-arm-sflt.tar.gz"

JAVA_BUILD="ARMv5/ARMv6/ARMv7 Linux - SoftFP ABI, Little Endian 2"

elif [ "${SYNO_CPU_ARCH}" == "armv7l" ]; then

JAVA_BINARY="ejdk-8u151-linux-arm-sflt.tar.gz"

JAVA_BUILD="ARMv5/ARMv6/ARMv7 Linux - SoftFP ABI, Little Endian 2"

elif [ "${SYNO_CPU_ARCH}" == "armhf" ]; then

JAVA_BINARY="ejdk-8u151-linux-armv6-vfp-hflt.tar.gz"

JAVA_BUILD="ARMv6/ARMv7 Linux - VFP, HardFP ABI, Little Endian 1"

elif [ "${SYNO_CPU_ARCH}" == "ppc" ]; then

#Oracle have discontinued Java 8 for PowerPC after update 6

JAVA_BINARY="ejdk-8u6-fcs-b23-linux-ppc-e500v2-12_jun_2014.tar.gz"

JAVA_BUILD="Power Architecture Linux - Headless - e500v2 with double-precision SPE Floating Point Unit"

EXTRACTED_FOLDER="ejdk1.8.0_06"

DOWNLOAD_URL="http://tinyurl.com/java8ppc"

elif [ "${SYNO_CPU_ARCH}" == "i686" ]; then

JAVA_BINARY="ejdk-8u151-linux-i586.tar.gz"

JAVA_BUILD="x86 Linux Small Footprint - Headless"

elif [ "${SYNO_CPU_ARCH}" == "x64" ]; then

JAVA_BINARY="jre-8u151-linux-x64.tar.gz"

JAVA_BUILD="Linux x64"

EXTRACTED_FOLDER="jre1.8.0_151"

DOWNLOAD_URL="http://tinyurl.com/java8x64"

fi

fi

JAVA_BINARY=`echo ${JAVA_BINARY} | cut -f1 -d'.'`

source /etc/profile

pre_checks ()

{

#These checks are called from preinst and from preupgrade functions to prevent failures resulting in a partially upgraded package

if [ "${WIZARD_JRE_CP}" == "true" ]; then

#use a brace group here so that exit ends the script rather than just a subshell

synoshare --get public > /dev/null || {

echo "A shared folder called 'public' could not be found - note this name is case-sensitive. " >> $SYNOPKG_TEMP_LOGFILE

echo "Please create this using the Shared Folder DSM Control Panel and try again." >> $SYNOPKG_TEMP_LOGFILE

exit 1

}

JAVA_BINARY_FOUND=

[ -f ${PUBLIC_FOLDER}/${JAVA_BINARY}.tar.gz ] && JAVA_BINARY_FOUND=true

[ -f ${PUBLIC_FOLDER}/${JAVA_BINARY}.tar ] && JAVA_BINARY_FOUND=true

[ -f ${PUBLIC_FOLDER}/${JAVA_BINARY}.tar.tar ] && JAVA_BINARY_FOUND=true

[ -f ${PUBLIC_FOLDER}/${JAVA_BINARY}.gz ] && JAVA_BINARY_FOUND=true

if [ -z ${JAVA_BINARY_FOUND} ]; then

echo "Java binary bundle not found. " >> $SYNOPKG_TEMP_LOGFILE

echo "I was expecting the file ${PUBLIC_FOLDER}/${JAVA_BINARY}.tar.gz. " >> $SYNOPKG_TEMP_LOGFILE

echo "Please agree to the Oracle licence at ${DOWNLOAD_URL}, then download the '${JAVA_BUILD}' package" >> $SYNOPKG_TEMP_LOGFILE

echo "and place it in the 'public' shared folder on your NAS. This download cannot be automated even if " >> $SYNOPKG_TEMP_LOGFILE

echo "displaying a package EULA could potentially cover the legal aspect, because files hosted on Oracle's " >> $SYNOPKG_TEMP_LOGFILE

echo "server are protected by a session cookie requiring a JavaScript enabled browser." >> $SYNOPKG_TEMP_LOGFILE

exit 1

fi

else

if [ -z ${JAVA_HOME} ]; then

echo "Java is not installed or not properly configured. JAVA_HOME is not defined. " >> $SYNOPKG_TEMP_LOGFILE

echo "Download and install the Java Synology package from http://wp.me/pVshC-z5" >> $SYNOPKG_TEMP_LOGFILE

exit 1

fi

if [ ! -f ${JAVA_HOME}/bin/java ]; then

echo "Java is not installed or not properly configured. The Java binary could not be located. " >> $SYNOPKG_TEMP_LOGFILE

echo "Download and install the Java Synology package from http://wp.me/pVshC-z5" >> $SYNOPKG_TEMP_LOGFILE

exit 1

fi

if [ "${WIZARD_JRE_SYS}" == "true" ]; then

JAVA_VER=`java -version 2>&1 | sed -r "/^.* version/!d;s/^.* version \"[0-9]\.([0-9]).*$/\1/"`

if [ ${JAVA_VER} -lt 8 ]; then

echo "This version of CrashPlan requires Java 8 or newer. Please update your Java package. " >> $SYNOPKG_TEMP_LOGFILE

exit 1

fi

fi

fi

}

preinst ()

{

pre_checks

cd ${TEMP_FOLDER}

for WGET_URL in ${INSTALL_FILES}

do

WGET_FILENAME="`echo ${WGET_URL} | sed -r "s%^.*/(.*)%\1%"`"

[ -f ${TEMP_FOLDER}/${WGET_FILENAME} ] && rm ${TEMP_FOLDER}/${WGET_FILENAME}

wget ${WGET_URL}

if [[ $? != 0 ]]; then

if [ -d ${PUBLIC_FOLDER} ] && [ -f ${PUBLIC_FOLDER}/${WGET_FILENAME} ]; then

cp ${PUBLIC_FOLDER}/${WGET_FILENAME} ${TEMP_FOLDER}

else

echo "There was a problem downloading ${WGET_FILENAME} from the official download link, " >> $SYNOPKG_TEMP_LOGFILE

echo "which was \"${WGET_URL}\" " >> $SYNOPKG_TEMP_LOGFILE

echo "Alternatively, you may download this file manually and place it in the 'public' shared folder. " >> $SYNOPKG_TEMP_LOGFILE

exit 1

fi

fi

done

exit 0

}

postinst ()

{

if [ "${WIZARD_JRE_CP}" == "true" ]; then

#extract Java (Web browsers love to interfere with .tar.gz files)

cd ${PUBLIC_FOLDER}

if [ -f ${JAVA_BINARY}.tar.gz ]; then

#Firefox seems to be the only browser that leaves it alone

tar xzf ${JAVA_BINARY}.tar.gz

elif [ -f ${JAVA_BINARY}.gz ]; then

#Chrome

tar xzf ${JAVA_BINARY}.gz

elif [ -f ${JAVA_BINARY}.tar ]; then

#Safari

tar xf ${JAVA_BINARY}.tar

elif [ -f ${JAVA_BINARY}.tar.tar ]; then

#Internet Explorer

tar xzf ${JAVA_BINARY}.tar.tar

fi

mv ${EXTRACTED_FOLDER} ${SYNOPKG_PKGDEST}/jre-syno

JRE_PATH="`find ${OPTDIR}/jre-syno/ -name jre`"

[ -z ${JRE_PATH} ] && JRE_PATH=${OPTDIR}/jre-syno

#change owner of folder tree

chown -R root:root ${SYNOPKG_PKGDEST}

fi

#extract CPU-specific additional binaries

mkdir ${SYNOPKG_PKGDEST}/bin

cd ${SYNOPKG_PKGDEST}/bin

tar xJf ${TEMP_FOLDER}/${NATIVE_BINS_FILE} && rm ${TEMP_FOLDER}/${NATIVE_BINS_FILE}

[ "${OLD_JNA_NEEDED}" == "true" ] && tar xJf ${TEMP_FOLDER}/${OLD_JNA_FILE} && rm ${TEMP_FOLDER}/${OLD_JNA_FILE}

#extract main archive

cd ${TEMP_FOLDER}

tar xzf ${TEMP_FOLDER}/${DOWNLOAD_FILE} && rm ${TEMP_FOLDER}/${DOWNLOAD_FILE}

#extract cpio archive

cd ${SYNOPKG_PKGDEST}

cat "${TEMP_FOLDER}/${CP_EXTRACTED_FOLDER}"/${CPI_FILE} | gzip -d -c - | ${SYNOPKG_PKGDEST}/bin/cpio -i --no-preserve-owner

echo "#uncomment to expand Java max heap size beyond prescribed value (will survive upgrades)" > ${SYNOPKG_PKGDEST}/syno_package.vars

echo "#you probably only want more than the recommended 1024M if you're backing up extremely large volumes of files" >> ${SYNOPKG_PKGDEST}/syno_package.vars

echo "#USR_MAX_HEAP=1024M" >> ${SYNOPKG_PKGDEST}/syno_package.vars

echo >> ${SYNOPKG_PKGDEST}/syno_package.vars

cp ${TEMP_FOLDER}/${CP_EXTRACTED_FOLDER}/scripts/CrashPlanEngine ${OPTDIR}/bin

cp ${TEMP_FOLDER}/${CP_EXTRACTED_FOLDER}/scripts/run.conf ${OPTDIR}/bin

mkdir -p ${MANIFEST_FOLDER}/backupArchives

#save install variables which Crashplan expects its own installer script to create

echo TARGETDIR=${SYNOPKG_PKGDEST} > ${VARS_FILE}

echo BINSDIR=/bin >> ${VARS_FILE}

echo MANIFESTDIR=${MANIFEST_FOLDER}/backupArchives >> ${VARS_FILE}

#leave these ones out which should help upgrades from Code42 to work (based on examining an upgrade script)

#echo INITDIR=/etc/init.d >> ${VARS_FILE}

#echo RUNLVLDIR=/usr/syno/etc/rc.d >> ${VARS_FILE}

echo INSTALLDATE=`date +%Y%m%d` >> ${VARS_FILE}

[ "${WIZARD_JRE_CP}" == "true" ] && echo JAVACOMMON=${JRE_PATH}/bin/java >> ${VARS_FILE}

[ "${WIZARD_JRE_SYS}" == "true" ] && echo JAVACOMMON=\${JAVA_HOME}/bin/java >> ${VARS_FILE}

cat ${TEMP_FOLDER}/${CP_EXTRACTED_FOLDER}/install.defaults >> ${VARS_FILE}

#remove temp files

rm -r ${TEMP_FOLDER}/${CP_EXTRACTED_FOLDER}

#add firewall config

/usr/syno/bin/servicetool --install-configure-file --package /var/packages/${SYNOPKG_PKGNAME}/scripts/${SYNOPKG_PKGNAME}.sc > /dev/null

#amend CrashPlanPROe client version

[ "${SYNOPKG_PKGNAME}" == "CrashPlanPROe" ] && sed -i -r "s/^version=\".*(-.*$)/version=\"${CPPROE_VER}\1/" /var/packages/${SYNOPKG_PKGNAME}/INFO

#are we transitioning an existing CrashPlan account to CrashPlan For Small Business?

if [ "${SYNOPKG_PKGNAME}" == "CrashPlanPRO" ]; then

if [ -e /var/packages/CrashPlan/scripts/start-stop-status ]; then

/var/packages/CrashPlan/scripts/start-stop-status stop

cp /var/lib/crashplan/.identity ${PUBLIC_FOLDER}/crashplan-identity.bak

cp -R /var/packages/CrashPlan/target/conf/ ${OPTDIR}/

fi

fi

exit 0

}

preuninst ()

{

`dirname $0`/start-stop-status stop

exit 0

}

postuninst ()

{

if [ -f ${SYNOPKG_PKGDEST}/syno_package.vars ]; then

source ${SYNOPKG_PKGDEST}/syno_package.vars

fi

[ -e ${OPTDIR}/lib/libffi.so.5 ] && rm ${OPTDIR}/lib/libffi.so.5

#delete symlink if it no longer resolves - PowerPC only

if [ ! -e /lib/libffi.so.5 ]; then

[ -L /lib/libffi.so.5 ] && rm /lib/libffi.so.5

fi

#remove firewall config

if [ "${SYNOPKG_PKG_STATUS}" == "UNINSTALL" ]; then

/usr/syno/bin/servicetool --remove-configure-file --package ${SYNOPKG_PKGNAME}.sc > /dev/null

fi

exit 0

}

preupgrade ()

{

`dirname $0`/start-stop-status stop

pre_checks

#if identity exists back up config

if [ -f /var/lib/crashplan/.identity ]; then

mkdir -p ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig/conf

for FILE_TO_MIGRATE in ${UPGRADE_FILES}; do

if [ -f ${OPTDIR}/${FILE_TO_MIGRATE} ]; then

cp ${OPTDIR}/${FILE_TO_MIGRATE} ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig/${FILE_TO_MIGRATE}

fi

done

for FOLDER_TO_MIGRATE in ${UPGRADE_FOLDERS}; do

if [ -d ${OPTDIR}/${FOLDER_TO_MIGRATE} ]; then

mv ${OPTDIR}/${FOLDER_TO_MIGRATE} ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig

fi

done

fi

exit 0

}

postupgrade ()

{

#use the migrated identity and config data from the previous version

if [ -f ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig/conf/my.service.xml ]; then

for FILE_TO_MIGRATE in ${UPGRADE_FILES}; do

if [ -f ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig/${FILE_TO_MIGRATE} ]; then

mv ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig/${FILE_TO_MIGRATE} ${OPTDIR}/${FILE_TO_MIGRATE}

fi

done

for FOLDER_TO_MIGRATE in ${UPGRADE_FOLDERS}; do

if [ -d ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig/${FOLDER_TO_MIGRATE} ]; then

mv ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig/${FOLDER_TO_MIGRATE} ${OPTDIR}

fi

done

rmdir ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig/conf

rmdir ${SYNOPKG_PKGDEST}/../${SYNOPKG_PKGNAME}_data_mig

#make CrashPlan log entry

TIMESTAMP="`date "+%D %I:%M%p"`"

echo "I ${TIMESTAMP} Synology Package Center updated ${SYNOPKG_PKGNAME} to version ${SYNOPKG_PKGVER}" >> ${LOG_FILE}

fi

exit 0

}
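The way pre_checks and postinst cope with browsers renaming the Java download can be shown in isolation. The sketch below is a simplified illustration rather than the package's own code: `find_java_archive` is a hypothetical helper, and it only reports which of the four file name variants is present, checking them in the same order as postinst.

```shell
#!/bin/sh
# Hypothetical helper: locate the Java archive under any of the names that
# web browsers tend to give it (.tar.gz, .gz, .tar, .tar.tar).
find_java_archive() {
    dir="$1"    # folder to search, e.g. the path of the 'public' share
    base="$2"   # file name up to the first dot, e.g. jre-8u151-linux-x64
    for ext in tar.gz gz tar tar.tar; do
        if [ -f "${dir}/${base}.${ext}" ]; then
            echo "${dir}/${base}.${ext}"
            return 0
        fi
    done
    # Nothing matched: the caller should report which file is expected
    return 1
}

# Example call; /volume1/public is an assumed share path
find_java_archive /volume1/public jre-8u151-linux-x64 || echo "Java archive not found"
```

Note that the installer extracts the .tar variant without gzip decompression, so a caller would still need to choose the tar flags based on the extension that matched.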

start-stop-status.sh

#!/bin/sh

#--------CRASHPLAN start-stop-status script

#--------package maintained at pcloadletter.co.uk

TEMP_FOLDER="`find / -maxdepth 2 -path '/volume?/@tmp' | head -n 1`"

MANIFEST_FOLDER="/`echo $TEMP_FOLDER | cut -f2 -d'/'`/crashplan"

ENGINE_CFG="run.conf"

PKG_FOLDER="`dirname $0 | cut -f1-4 -d'/'`"

DNAME="`dirname $0 | cut -f4 -d'/'`"

OPTDIR="${PKG_FOLDER}/target"

PID_FILE="${OPTDIR}/${DNAME}.pid"

DLOG="${OPTDIR}/log/history.log.0"

CFG_PARAM="SRV_JAVA_OPTS"

JAVA_MIN_HEAP=`grep "^${CFG_PARAM}=" "${OPTDIR}/bin/${ENGINE_CFG}" | sed -r "s/^.*-Xms([0-9]+)[Mm] .*$/\1/"`

SYNO_CPU_ARCH="`uname -m`"

TIMESTAMP="`date "+%D %I:%M%p"`"

FULL_CP="${OPTDIR}/lib/com.backup42.desktop.jar:${OPTDIR}/lang"

source ${OPTDIR}/install.vars

source /etc/profile

source /root/.profile

start_daemon ()

{

#check persistent variables from syno_package.vars

USR_MAX_HEAP=0

if [ -f ${OPTDIR}/syno_package.vars ]; then

source ${OPTDIR}/syno_package.vars

fi

USR_MAX_HEAP=`echo $USR_MAX_HEAP | sed -e "s/[mM]//"`

#do we need to restore the identity file - has a DSM upgrade scrubbed /var/lib/crashplan?

if [ ! -e /var/lib/crashplan ]; then

mkdir /var/lib/crashplan

[ -e ${OPTDIR}/conf/var-backup/.identity ] && cp ${OPTDIR}/conf/var-backup/.identity /var/lib/crashplan/

fi

#fix up some of the binary paths and fix some command syntax for busybox

#moved this to start-stop-status.sh from installer.sh because Code42 push updates and these

#new scripts will need this treatment too

find ${OPTDIR}/ -name "*.sh" | while IFS="" read -r FILE_TO_EDIT; do

if [ -e ${FILE_TO_EDIT} ]; then

#this list of substitutions will probably need expanding as new CrashPlan updates are released

sed -i "s%^#!/bin/bash%#!/bin/sh%" "${FILE_TO_EDIT}"

sed -i -r "s%(^\s*)(/bin/cpio |cpio ) %\1/${OPTDIR}/bin/cpio %" "${FILE_TO_EDIT}"

sed -i -r "s%(^\s*)(/bin/ps|ps) [^w][^\|]*\|%\1/bin/ps w \|%" "${FILE_TO_EDIT}"

sed -i -r "s%\`ps [^w][^\|]*\|%\`ps w \|%" "${FILE_TO_EDIT}"

sed -i -r "s%^ps [^w][^\|]*\|%ps w \|%" "${FILE_TO_EDIT}"

sed -i "s/rm -fv/rm -f/" "${FILE_TO_EDIT}"

sed -i "s/mv -fv/mv -f/" "${FILE_TO_EDIT}"

fi

done

#use this daemon init script rather than the unreliable Code42 stock one which greps the ps output

sed -i "s%^ENGINE_SCRIPT=.*$%ENGINE_SCRIPT=$0%" ${OPTDIR}/bin/restartLinux.sh

#any downloaded upgrade script will usually have failed despite the above changes

#so ignore the script and explicitly extract the new java code using the chrisnelson.ca method

#thanks to Jeff Bingham for tweaks

UPGRADE_JAR=`find ${OPTDIR}/upgrade -maxdepth 1 -name "*.jar" | tail -1`

if [ -n "${UPGRADE_JAR}" ]; then

rm ${OPTDIR}/*.pid > /dev/null

#make CrashPlan log entry

echo "I ${TIMESTAMP} Synology extracting upgrade from ${UPGRADE_JAR}" >> ${DLOG}

UPGRADE_VER=`echo ${SCRIPT_HOME} | sed -r "s/^.*\/([0-9_]+)\.[0-9]+/\1/"`

#DSM 6.0 no longer includes unzip, use 7z instead

unzip -o ${OPTDIR}/upgrade/${UPGRADE_VER}.jar "*.jar" -d ${OPTDIR}/lib/ || 7z e -y ${OPTDIR}/upgrade/${UPGRADE_VER}.jar "*.jar" -o${OPTDIR}/lib/ > /dev/null

unzip -o ${OPTDIR}/upgrade/${UPGRADE_VER}.jar "lang/*" -d ${OPTDIR} || 7z e -y ${OPTDIR}/upgrade/${UPGRADE_VER}.jar "lang/*" -o${OPTDIR} > /dev/null

mv ${UPGRADE_JAR} ${TEMP_FOLDER}/ > /dev/null

exec $0

fi

#updates may also overwrite our native binaries

[ -e ${OPTDIR}/bin/libffi.so.5 ] && cp -f ${OPTDIR}/bin/libffi.so.5 ${OPTDIR}/lib/

[ -e ${OPTDIR}/bin/libjtux.so ] && cp -f ${OPTDIR}/bin/libjtux.so ${OPTDIR}/

[ -e ${OPTDIR}/bin/jna-3.2.5.jar ] && cp -f ${OPTDIR}/bin/jna-3.2.5.jar ${OPTDIR}/lib/

if [ -e ${OPTDIR}/bin/jna.jar ] && [ -e ${OPTDIR}/lib/jna.jar ]; then

cp -f ${OPTDIR}/bin/jna.jar ${OPTDIR}/lib/

fi

#create or repair libffi.so.5 symlink if a DSM upgrade has removed it - PowerPC only

if [ -e ${OPTDIR}/lib/libffi.so.5 ]; then

if [ ! -e /lib/libffi.so.5 ]; then

#if it doesn't exist, but is still a link then it's a broken link and should be deleted first

[ -L /lib/libffi.so.5 ] && rm /lib/libffi.so.5

ln -s ${OPTDIR}/lib/libffi.so.5 /lib/libffi.so.5

fi

fi

#set appropriate Java max heap size

RAM=$((`free | grep Mem: | sed -e "s/^ *Mem: *\([0-9]*\).*$/\1/"`/1024))

if [ $RAM -le 128 ]; then

JAVA_MAX_HEAP=80

elif [ $RAM -le 256 ]; then

JAVA_MAX_HEAP=192

elif [ $RAM -le 512 ]; then

JAVA_MAX_HEAP=384

elif [ $RAM -le 1024 ]; then

JAVA_MAX_HEAP=512

elif [ $RAM -gt 1024 ]; then

JAVA_MAX_HEAP=1024

fi

if [ $USR_MAX_HEAP -gt $JAVA_MAX_HEAP ]; then

JAVA_MAX_HEAP=${USR_MAX_HEAP}

fi

if [ $JAVA_MAX_HEAP -lt $JAVA_MIN_HEAP ]; then

#can't have a max heap lower than min heap (ARM low RAM systems)

JAVA_MAX_HEAP=$JAVA_MIN_HEAP

fi

sed -i -r "s/(^${CFG_PARAM}=.*) -Xmx[0-9]+[mM] (.*$)/\1 -Xmx${JAVA_MAX_HEAP}m \2/" "${OPTDIR}/bin/${ENGINE_CFG}"

#disable the use of the x86-optimized external Fast MD5 library if running on ARM and PPC CPUs

#seems to be the default behaviour now but that may change again

[ "${SYNO_CPU_ARCH}" == "x86_64" ] && SYNO_CPU_ARCH="i686"

if [ "${SYNO_CPU_ARCH}" != "i686" ]; then

grep "^${CFG_PARAM}=.*c42\.native\.md5\.enabled" "${OPTDIR}/bin/${ENGINE_CFG}" > /dev/null \

|| sed -i -r "s/(^${CFG_PARAM}=\".*)\"$/\1 -Dc42.native.md5.enabled=false\"/" "${OPTDIR}/bin/${ENGINE_CFG}"

fi

#move the Java temp directory from the default of /tmp

grep "^${CFG_PARAM}=.*Djava\.io\.tmpdir" "${OPTDIR}/bin/${ENGINE_CFG}" > /dev/null \

|| sed -i -r "s%(^${CFG_PARAM}=\".*)\"$%\1 -Djava.io.tmpdir=${TEMP_FOLDER}\"%" "${OPTDIR}/bin/${ENGINE_CFG}"

#now edit the XML config file, which only exists after first run

if [ -f ${OPTDIR}/conf/my.service.xml ]; then

#allow direct connections from CrashPlan Desktop client on remote systems

#you must edit the value of serviceHost in conf/ui.properties on the client you connect with

#users report that this value is sometimes reset so now it's set every service startup

sed -i "s/<serviceHost>127\.0\.0\.1<\/serviceHost>/<serviceHost>0\.0\.0\.0<\/serviceHost>/" "${OPTDIR}/conf/my.service.xml"

#default changed in CrashPlan 4.3

sed -i "s/<serviceHost>localhost<\/serviceHost>/<serviceHost>0\.0\.0\.0<\/serviceHost>/" "${OPTDIR}/conf/my.service.xml"

#since CrashPlan 4.4 another config file to allow remote console connections

sed -i "s/127\.0\.0\.1/0\.0\.0\.0/" /var/lib/crashplan/.ui_info

#this change is made only once in case you want to customize the friends' backup location

if [ "${MANIFEST_PATH_SET}" != "True" ]; then

#keep friends' backup data outside the application folder to make accidental deletion less likely

sed -i "s%<manifestPath>.*</manifestPath>%<manifestPath>${MANIFEST_FOLDER}/backupArchives/</manifestPath>%" "${OPTDIR}/conf/my.service.xml"

echo "MANIFEST_PATH_SET=True" >> ${OPTDIR}/syno_package.vars

fi

#since CrashPlan version 3.5.3 the value javaMemoryHeapMax also needs setting to match that used in bin/run.conf

sed -i -r "s%(<javaMemoryHeapMax>)[0-9]+[mM](</javaMemoryHeapMax>)%\1${JAVA_MAX_HEAP}m\2%" "${OPTDIR}/conf/my.service.xml"

#make sure CrashPlan is not binding to the IPv6 stack

grep "\-Djava\.net\.preferIPv4Stack=true" "${OPTDIR}/bin/${ENGINE_CFG}" > /dev/null \

|| sed -i -r "s/(^${CFG_PARAM}=\".*)\"$/\1 -Djava.net.preferIPv4Stack=true\"/" "${OPTDIR}/bin/${ENGINE_CFG}"

else

echo "Check the package log to ensure the package has started successfully, then stop and restart the package to allow desktop client connections." > "${SYNOPKG_TEMP_LOGFILE}"

fi

#increase the system-wide maximum number of open files from Synology default of 24466

[ `cat /proc/sys/fs/file-max` -lt 65536 ] && echo "65536" > /proc/sys/fs/file-max

#raise the maximum open file count from the Synology default of 1024 - thanks Casper K. for figuring this out

#http://support.code42.com/Administrator/3.6_And_4.0/Troubleshooting/Too_Many_Open_Files

ulimit -n 65536

#ensure that Code 42 have not amended install.vars to force the use of their own (Intel) JRE

if [ -e ${OPTDIR}/jre-syno ]; then

JRE_PATH="`find ${OPTDIR}/jre-syno/ -name jre`"

[ -z ${JRE_PATH} ] && JRE_PATH=${OPTDIR}/jre-syno

sed -i -r "s|^(JAVACOMMON=).*$|\1\${JRE_PATH}/bin/java|" ${OPTDIR}/install.vars

#if missing, set timezone and locale for dedicated JRE

if [ -z ${TZ} ]; then

SYNO_TZ=`cat /etc/synoinfo.conf | grep timezone | cut -f2 -d'"'`

#fix for DST time in DSM 5.2 thanks to MinimServer Syno package author

[ -e /usr/share/zoneinfo/Timezone/synotztable.json ] \

&& SYNO_TZ=`jq ".${SYNO_TZ} | .nameInTZDB" /usr/share/zoneinfo/Timezone/synotztable.json | sed -e "s/\"//g"` \

|| SYNO_TZ=`grep "^${SYNO_TZ}" /usr/share/zoneinfo/Timezone/tzname | sed -e "s/^.*= //"`

export TZ=${SYNO_TZ}

fi

[ -z ${LANG} ] && export LANG=en_US.utf8

export CLASSPATH=.:${OPTDIR}/jre-syno/lib

else

sed -i -r "s|^(JAVACOMMON=).*$|\1\${JAVA_HOME}/bin/java|" ${OPTDIR}/install.vars

fi

source ${OPTDIR}/bin/run.conf

source ${OPTDIR}/install.vars

cd ${OPTDIR}

$JAVACOMMON $SRV_JAVA_OPTS -classpath $FULL_CP com.backup42.service.CPService > ${OPTDIR}/log/engine_output.log 2> ${OPTDIR}/log/engine_error.log &

if [ $! -gt 0 ]; then

echo $! > $PID_FILE

renice 19 $! > /dev/null

if [ -z "${SYNOPKG_PKGDEST}" ]; then

#script was manually invoked, need this to show status change in Package Center

[ -e ${PKG_FOLDER}/enabled ] || touch ${PKG_FOLDER}/enabled

fi

else

echo "${DNAME} failed to start, check ${OPTDIR}/log/engine_error.log" > "${SYNOPKG_TEMP_LOGFILE}"

echo "${DNAME} failed to start, check ${OPTDIR}/log/engine_error.log" >&2

exit 1

fi

}

stop_daemon ()

{

echo "I ${TIMESTAMP} Stopping ${DNAME}" >> ${DLOG}

kill `cat ${PID_FILE}`

wait_for_status 1 20 || kill -9 `cat ${PID_FILE}`

rm -f ${PID_FILE}

if [ -z ${SYNOPKG_PKGDEST} ]; then

#script was manually invoked, need this to show status change in Package Center

[ -e ${PKG_FOLDER}/enabled ] && rm ${PKG_FOLDER}/enabled

fi

#backup identity file in case DSM upgrade removes it

[ -e ${OPTDIR}/conf/var-backup ] || mkdir ${OPTDIR}/conf/var-backup

cp /var/lib/crashplan/.identity ${OPTDIR}/conf/var-backup/

}

daemon_status ()

{

if [ -f ${PID_FILE} ] && kill -0 `cat ${PID_FILE}` > /dev/null 2>&1; then

return

fi

rm -f ${PID_FILE}

return 1

}

wait_for_status ()

{

counter=$2

while [ ${counter} -gt 0 ]; do

daemon_status

[ $? -eq $1 ] && return

let counter=counter-1

sleep 1

done

return 1

}

case $1 in

start)

if daemon_status; then

echo ${DNAME} is already running with PID `cat ${PID_FILE}`

exit 0

else

echo Starting ${DNAME} ...

start_daemon

exit $?

fi

;;

stop)

if daemon_status; then

echo Stopping ${DNAME} ...

stop_daemon

exit $?

else

echo ${DNAME} is not running

exit 0

fi

;;

restart)

stop_daemon

start_daemon

exit $?

;;

status)

if daemon_status; then

echo ${DNAME} is running with PID `cat ${PID_FILE}`

exit 0

else

echo ${DNAME} is not running

exit 1

fi

;;

log)

echo "${DLOG}"

exit 0

;;

*)

echo "Usage: $0 {start|stop|status|restart|log}" >&2

exit 1

;;

esac
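The heap sizing rules inside start_daemon can be pulled out into a small function for experimenting away from the NAS. This is only a sketch of those rules, not part of the package: `pick_max_heap` is a hypothetical name, and its three arguments stand in for the detected RAM, `USR_MAX_HEAP` and `JAVA_MIN_HEAP` (all in MB).

```shell
#!/bin/sh
# Hypothetical helper mirroring the RAM-to-heap tiers used in start_daemon.
# $1 = total RAM in MB, $2 = user override in MB (optional), $3 = min heap in MB (optional)
pick_max_heap () {
  ram_mb=$1
  usr_max=${2:-0}
  min_heap=${3:-0}

  if [ "$ram_mb" -le 128 ]; then
    heap=80
  elif [ "$ram_mb" -le 256 ]; then
    heap=192
  elif [ "$ram_mb" -le 512 ]; then
    heap=384
  elif [ "$ram_mb" -le 1024 ]; then
    heap=512
  else
    heap=1024
  fi

  # a larger user-specified value wins over the tier default
  if [ "$usr_max" -gt "$heap" ]; then heap=$usr_max; fi
  # the max heap can never be lower than the min heap
  if [ "$heap" -lt "$min_heap" ]; then heap=$min_heap; fi

  echo "$heap"
}

pick_max_heap 3072 1536   # 3GB of RAM with a 1536MB user override
pick_max_heap 128 0 96    # low-RAM system whose min heap exceeds the tier value
```

The first call prints 1536 because the user value exceeds the 1024MB tier; the second prints 96 because the 80MB tier is raised to the minimum heap.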

install_uifile & upgrade_uifile

[

{

"step_title": "Client Version Selection",

"items": [

{

"type": "singleselect",

"desc": "Please select the CrashPlanPROe client version that is appropriate for your backup destination server:",

"subitems": [

{

"key": "WIZARD_VER_483",

"desc": "4.8.3",

"defaultValue": true

},

{

"key": "WIZARD_VER_480",

"desc": "4.8.0",

"defaultValue": false

},

{

"key": "WIZARD_VER_470",

"desc": "4.7.0",

"defaultValue": false

},

{

"key": "WIZARD_VER_460",

"desc": "4.6.0",

"defaultValue": false

},

{

"key": "WIZARD_VER_452",

"desc": "4.5.2",

"defaultValue": false

},

{

"key": "WIZARD_VER_450",

"desc": "4.5.0",

"defaultValue": false

},

{

"key": "WIZARD_VER_441",

"desc": "4.4.1",

"defaultValue": false

},

{

"key": "WIZARD_VER_430",

"desc": "4.3.0",

"defaultValue": false

},

{

"key": "WIZARD_VER_420",

"desc": "4.2.0",

"defaultValue": false

},

{

"key": "WIZARD_VER_370",

"desc": "3.7.0",

"defaultValue": false

},

{

"key": "WIZARD_VER_364",

"desc": "3.6.4",

"defaultValue": false

},

{

"key": "WIZARD_VER_363",

"desc": "3.6.3",

"defaultValue": false

},

{

"key": "WIZARD_VER_3614",

"desc": "3.6.1.4",

"defaultValue": false

},

{

"key": "WIZARD_VER_353",

"desc": "3.5.3",

"defaultValue": false

},

{

"key": "WIZARD_VER_341",

"desc": "3.4.1",

"defaultValue": false

},

{

"key": "WIZARD_VER_33",

"desc": "3.3",

"defaultValue": false

}

]

}

]

},

{

"step_title": "Java Runtime Environment Selection",

"items": [

{

"type": "singleselect",

"desc": "Please select the Java version which you would like CrashPlan to use:",

"subitems": [

{

"key": "WIZARD_JRE_SYS",

"desc": "Default system Java version",

"defaultValue": false

},

{

"key": "WIZARD_JRE_CP",

"desc": "Dedicated installation of Java 8",

"defaultValue": true

}

]

}

]

}

]
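To illustrate how a script might act on the wizard choices above, here is a minimal sketch. It assumes each wizard `key` reaches the package scripts as an environment variable set to `true` when selected; `selected_client_version` is a hypothetical helper and is not taken from the package's installer.

```shell
#!/bin/sh
# Hypothetical helper, not taken from the package's installer.
# Assumption: every wizard "key" is exported as an environment variable
# whose value is "true" for the option the user picked.
selected_client_version () {
  for ver in 483 480 470 460 452 450 441 430 420 370 364 363 3614 353 341 33; do
    # look up WIZARD_VER_<ver>, treating an unset variable as "false"
    eval "chosen=\${WIZARD_VER_${ver}:-false}"
    if [ "$chosen" = "true" ]; then
      echo "$ver"
      return 0
    fi
  done
  # nothing selected
  return 1
}

# simulate the user picking 4.7.0 in the wizard
WIZARD_VER_470=true
selected_client_version
```

The function prints the key suffix (here 470) rather than a download URL, since the mapping from version to installer file is outside what this page shows.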

Changelog:

- 0047 30/Oct/17 – Updated dedicated Java version to 8 update 151, added support for additional Intel CPUs in x18 Synology products.

- 0046 26/Aug/17 – Updated to CrashPlan PRO 4.9, added support for migration from CrashPlan For Home to CrashPlan For Small Business (CrashPlan PRO). Please read the Migration section on this page for instructions.

- 0045 02/Aug/17 – Updated to CrashPlan 4.8.3, updated dedicated Java version to 8 update 144

- 0044 21/Jan/17 – Updated dedicated Java version to 8 update 121

- 0043 07/Jan/17 – Updated dedicated Java version to 8 update 111, added support for Intel Broadwell and Grantley CPUs

- 0042 03/Oct/16 – Updated to CrashPlan 4.8.0, Java 8 is now required, added optional dedicated Java 8 Runtime instead of the default system one including 64bit Java support on 64 bit Intel CPUs to permit memory allocation larger than 4GB. Support for non-Intel platforms withdrawn owing to Code42’s reliance on proprietary native code library libc42archive.so

- 0041 20/Jul/16 – Improved auto-upgrade compatibility (hopefully), added option to have CrashPlan use a dedicated Java 7 Runtime instead of the default system one, including 64bit Java support on 64 bit Intel CPUs to permit memory allocation larger than 4GB

- 0040 25/May/16 – Added cpio to the path in the running context of start-stop-status.sh

- 0039 25/May/16 – Updated to CrashPlan 4.7.0, at each launch forced the use of the system JRE over the CrashPlan bundled Intel one, added Maven build of JNA 4.1.0 for ARMv7 systems consistent with the version bundled with CrashPlan

- 0038 27/Apr/16 – Updated to CrashPlan 4.6.0, and improved support for Code 42 pushed updates

- 0037 21/Jan/16 – Updated to CrashPlan 4.5.2

- 0036 14/Dec/15 – Updated to CrashPlan 4.5.0, separate firewall definitions for management client and for friends backup, added support for DS716+ and DS216play

- 0035 06/Nov/15 – Fixed the update to 4.4.1_59, new installs now listen for remote connections after second startup (was broken from 4.4), updated client install documentation with more file locations and added a link to a new Code42 support doc

  EITHER completely remove and reinstall the package (which will require a rescan of the entire backup set) OR alternatively please delete all except for one of the failed upgrade numbered subfolders in /var/packages/CrashPlan/target/upgrade before upgrading. There will be one folder for each time CrashPlan tried and failed to start since Code42 pushed the update

- 0034 04/Oct/15 – Updated to CrashPlan 4.4.1, bundled newer JNA native libraries to match those from Code42, PLEASE READ UPDATED BLOG POST INSTRUCTIONS FOR CLIENT INSTALL this version introduced yet another requirement for the client

- 0033 12/Aug/15 – Fixed version 0032 client connection issue for fresh installs

- 0032 12/Jul/15 – Updated to CrashPlan 4.3, PLEASE READ UPDATED BLOG POST INSTRUCTIONS FOR CLIENT INSTALL this version introduced an extra requirement, changed update repair to use the chrisnelson.ca method, forced CrashPlan to prefer IPv4 over IPv6 bindings, removed some legacy version migration scripting, updated main blog post documentation

- 0031 20/May/15 – Updated to CrashPlan 4.2, cross compiled a newer cpio binary for some architectures which were segfaulting while unpacking main CrashPlan archive, added port 4242 to the firewall definition (friend backups), package is now signed with repository private key

- 0030 16/Feb/15 – Fixed show-stopping issue with version 0029 for systems with more than one volume

- 0029 21/Jan/15 – Updated to CrashPlan version 3.7.0, improved detection of temp folder (prevent use of /var/@tmp), added support for Annapurna Alpine AL514 CPU (armhf) in DS2015xs, added support for Marvell Armada 375 CPU (armhf) in DS215j, abandoned practical efforts to try to support Code42’s upgrade scripts, abandoned inotify support (realtime backup) on PowerPC after many failed attempts with self-built and pre-built jtux and jna libraries, back-merged older libffi support for old PowerPC binaries after it was removed in 0028 re-write

- 0028 22/Oct/14 – Substantial re-write:

  - Updated to CrashPlan version 3.6.4

  - DSM 5.0 or newer is now required

  - libjnidispatch.so taken from Debian JNA 3.2.7 package with dependency on newer libffi.so.6 (included in DSM 5.0)

  - jna-3.2.5.jar emptied of irrelevant CPU architecture libs to reduce size

  - Increased default max heap size from 512MB to 1GB on systems with more than 1GB RAM

  - Intel CPUs no longer need the awkward glibc version-faking shim to enable inotify support (for real-time backup)

  - Switched to using root account – no more adding account permissions for backup, package upgrades will no longer break this

  - DSM Firewall application definition added

  - Tested with DSM Task Scheduler to allow backups between certain times of day only, saving RAM when not in use

  - Daemon init script now uses a proper PID file instead of Code42’s unreliable method of using grep on the output of ps

  - Daemon init script can be run from the command line

  - Removal of bash binary dependency now Code42’s CrashPlanEngine script is no longer used

  - Removal of nice binary dependency, using BusyBox equivalent renice

  - Unified ARMv5 and ARMv7 external binary package (armle)

  - Added support for Mindspeed Comcerto 2000 CPU (comcerto2k – armhf) in DS414j

  - Added support for Intel Atom C2538 (avoton) CPU in DS415+

  - Added support to choose which version of CrashPlan PROe client to download, since some servers may still require legacy versions

  - Switched to .tar.xz compression for native binaries to reduce web hosting footprint

- 0027 20/Mar/14 – Fixed open file handle limit for very large backup sets (ulimit fix)

- 0026 16/Feb/14 – Updated all CrashPlan clients to version 3.6.3, improved handling of Java temp files

- 0025 30/Jan/14 – glibc version shim no longer used on Intel Synology models running DSM 5.0

- 0024 30/Jan/14 – Updated to CrashPlan PROe 3.6.1.4 and added support for PowerPC 2010 Synology models running DSM 5.0

- 0023 30/Jan/14 – Added support for Intel Atom Evansport and Armada XP CPUs in new DSx14 products

- 0022 10/Jun/13 – Updated all CrashPlan client versions to 3.5.3, compiled native binary dependencies to add support for Armada 370 CPU (DS213j), start-stop-status.sh now updates the new javaMemoryHeapMax value in my.service.xml to the value defined in syno_package.vars

- 0021 01/Mar/13 – Updated CrashPlan to version 3.5.2

- 0020 21/Jan/13 – Fixes for DSM 4.2

- 018 Updated CrashPlan PRO to version 3.4.1

- 017 Updated CrashPlan and CrashPlan PROe to version 3.4.1, and improved in-app update handling

- 016 Added support for Freescale QorIQ CPUs in some x13 series Synology models, and installer script now downloads native binaries separately to reduce repo hosting bandwidth, PowerQUICC PowerPC processors in previous Synology generations with older glibc versions are not supported

- 015 Added support for easy scheduling via cron – see updated Notes section

- 014 DSM 4.1 user profile permissions fix

- 013 implemented update handling for future automatic updates from Code 42, and incremented CrashPlanPRO client to release version 3.2.1

- 012 incremented CrashPlanPROe client to release version 3.3

- 011 minor fix to allow a wildcard on the cpio archive name inside the main installer package (to fix CP PROe client since Code 42 Software had amended the cpio file version to 3.2.1.2)

- 010 minor bug fix relating to daemon home directory path

- 009 rewrote the scripts to be even easier to maintain and unified as much as possible with my imminent CrashPlan PROe server package, fixed a timezone bug (tightened regex matching), moved the script-amending logic from installer.sh to start-stop-status.sh with it now applying to all .sh scripts each startup so perhaps updates from Code42 might work in future, if wget fails to fetch the installer from Code42 the installer will look for the file in the public shared folder

- 008 merged the 14 package scripts each (7 for ARM, 7 for Intel) for CP, CP PRO, & CP PROe – 42 scripts in total – down to just two! ARM & Intel are now supported by the same package, Intel synos now have working inotify support (Real-Time Backup) thanks to rwojo’s shim to pass the glibc version check, upgrade process now retains login, cache and log data (no more re-scanning), users can specify a persistent larger max heap size for very large backup sets

- 007 fixed a bug that broke CrashPlan if the Java folder moved (if you changed version)

- 006 installation now fails without User Home service enabled, fixed Daylight Saving Time support, automated replacing the ARM libffi.so symlink which is destroyed by DSM upgrades, stopped assuming the primary storage volume is /volume1, reset ownership on /var/lib/crashplan and the Friends backup location after installs and upgrades

- 005 added warning to restart daemon after 1st run, and improved upgrade process again

- 004 updated to CrashPlan 3.2.1 and improved package upgrade process, forced binding to 0.0.0.0 each startup

- 003 fixed ownership of /volume1/crashplan folder

- 002 updated to CrashPlan 3.2

- 001 30/Jan/12 – initial public release

I have released a new version which fixes the missing daemon user account issue on DSM 4.2

Hi Patters! I run with the previous version (018) on DSM 4.2 Beta, after installing Perl and fixing some access issues for CrashPlan (first CP only had access to first level directory on Homes directories) it works like a charm… BUT… I noticed when trying to fix the access rights that all users have read access to all Homes directories (“Homes” hidden in “Network”) if they know how to find it (i.e. \\server\homes\user-x). Normal access to the Home directory is of course via \\server\home. I have tried to fix this but have not succeeded, if I remove the group “users” access to directories under “homes” the owner will not have write access… Any chance you can point me to the appropriate settings (per User, per Group, Shared Directories and File Station)? Many thanks!

Hi Johan,

How did you configure the permissions on your Syno? My CrashPlan can see the folders in /homes but not the content (except for the CrashPlan home dir). Making the CrashPlan user (temporarily) root in /etc/group didn’t solve my issue :-(

Thanks in advance!

Hi Ingmar,

The different user directories under “Homes” – the owner have Read, Write and Execute.

Others have Read and Execute.

On the directory “Homes” – No Access for everyone except Crashplan and Admin that have Read and Write.

User group “Users” have Read and Write access to “Homes”

User “Crashplan” have Read and Write to all needed directories.

Hope this helps!

Johan

That helped, thanx!

Patters,

I have been struggling with keeping the client up and running. After a few minutes it “disconnects” from the engine. I have been following the last few posts and uninstalled the client completely, reinstalled and such, with no luck.

I am running CP-PRO, DS1010+, 4.2 and Java Manager. There is 3GB ram in the DS, and I have 1GB allocated for heap.

I do not know where to go from here.

Thanks,

Charlie-

How much data are you trying to backup? And what does the log say?

I have 3.3TB total but am down to the last 175GB. It has taken almost 8 months to get this far. Was running OK (but slow), prior to the updates.

Hi again! I think I fixed the access issue, i.e. added “No Access” to “homes” for all users, now they can only access the directory where they are set as owner. THOUGH… I went into “File Station/File Browser” and changed properties on “homes” and set “No access” for all individual Users, now I can’t even SEE “homes” in “File Station” even though I logged in as Admin!!! How can I reverse this so I can see “homes” in “File Station” as Admin?

Thanks!

When I log in to DSM as “crashplan” user (with admin privileges) I can’t find File Station and subsequently can’t see if CrashPlan can still see “homes”… When checking from CP GUI on my PC I can browse all directories within “homes” so I guess it can access homes anyway… but why can’t Admin do that?

I can now start File Station when logged in as “crashplan” user but still can’t see “homes” in File Station… Do I need to re-install DSM (starting from scratch again) or will it be enough to disable “User Home Service” and enable it again? This will not remove the directories and files but will it reset the privileges?

RESOLVED! I had by mistake set “Users” group with “No access” to homes, and since Admin is also part of Users… :-)

Same issue as charlie… since a few days, the server stops and starts constantly… and the client disconnects after one or two minutes… reinstalling the whole thing did not help…

thx,

Fredi

Maybe I should add some more information:

– DSM 4.2 BETA

– DS 1511+, 3GB RAM

– approx. 1 TB of data… 80% completed

The issue just started to happen recently: The service starts to backup and suddenly it stops and re-starts automatically… no special comment in the Log except, that it has stopped and restarted again. That way the backup is still running.. but very very slow.

Interesting, the client quits with an error message as soon as the service stops.

This is the same problem that I’m also facing (see my post). Good to see that it’s nothing hardware-specific…

.. and I am using the java package distributed by Synology in DSM 4.2. Do I have to revert back to your java package?

Here an example from the log file (history.log.0, the error log file is empty):

I 02/13/13 06:20PM Backup scheduled to always run

I 02/13/13 06:20PM [Default] Starting backup to CrashPlan Central: 21,124 files (188GB) to back up

I 02/13/13 06:22PM CrashPlan started

I 02/13/13 06:22PM Backup scheduled to always run

I 02/13/13 06:22PM [Default] Starting backup to CrashPlan Central: 21,124 files (188GB) to back up

I 02/13/13 06:23PM CrashPlan started

I 02/13/13 06:23PM Backup scheduled to always run

I 02/13/13 06:24PM [Default] Starting backup to CrashPlan Central: 21,124 files (188GB) to back up

I 02/13/13 06:25PM CrashPlan started

I 02/13/13 06:25PM Backup scheduled to always run

I 02/13/13 06:25PM [Default] Starting backup to CrashPlan Central: 21,124 files (188GB) to back up

So finally I found it.. in the service log file:

com.code42.exception.DebugException: OutOfMemoryError occurred…RESTARTING! message=OutOfMemoryError in BackupQueue!

It happens approx. every 3000 lines in the log file.

Any hint what the cause could be?

How big is your heap size??

My heap size is 1024MB.

You’ll certainly need more than 1GB of heap to protect that amount of data. I’d advise upgrading the RAM of your Syno to 3GB if you haven’t already (assuming it’s an Intel one with a spare DIMM slot).

I found a description for the solution in the Synology Wiki where the manual installation of CrashPlan is described..

So mine is currently 512MB.. interesting when I want to increase it in run.conf it does overwrite the value after every start!

Any ideas? my intent is to increase it to -Xmx1536m

Above in the “Notes”, first article, you can find which config file you have to change to increase the heap size.

You made my day.. increased to 1.5 GB, is now running just fine. How could I have missed this note? Thanks a lot!
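For readers wondering why a hand-edited `-Xmx` value in run.conf does not survive a restart: the start script shown earlier recalculates `JAVA_MAX_HEAP` on every start and rewrites the `SRV_JAVA_OPTS` line with sed, so only the persistent `USR_MAX_HEAP` value read from syno_package.vars carries over. The sketch below replays that substitution on a throwaway file. The sample `SRV_JAVA_OPTS` line is an assumption made up for the demonstration, and `-E` is used in place of the script's `-r` for portability.

```shell
#!/bin/sh
# Sketch only: a temporary file stands in for bin/run.conf.
CFG_PARAM="SRV_JAVA_OPTS"
demo_conf=$(mktemp)

# assumed sample content, hand-edited to 1536MB as in the comment above
echo 'SRV_JAVA_OPTS="-Dfile.encoding=UTF-8 -Xms20m -Xmx1536m -Dnetworkaddress.cache.ttl=300"' > "$demo_conf"

# value the start script would compute when USR_MAX_HEAP is not set
JAVA_MAX_HEAP=512

# same substitution pattern as the start script, printed instead of edited in place
rewritten=$(sed -E "s/(^${CFG_PARAM}=.*) -Xmx[0-9]+[mM] (.*$)/\1 -Xmx${JAVA_MAX_HEAP}m \2/" "$demo_conf")
echo "$rewritten"

rm -f "$demo_conf"
```

The printed line carries `-Xmx512m` again, which is the behaviour described in the comment above. Setting `USR_MAX_HEAP` in syno_package.vars feeds into the computed value instead of fighting it.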

I have a DS411+II, 1GB, DSM 4.2-3161 using Patters “CrashPlan” and “Java SE for Embedded 6” packages.

USR_MAX_HEAP is set to 640M (not because I had problems – I just wanted to see if I could notice any change in memory use. I could not).

I also have a number of other SynoCommunity packages installed and running (e.g. SABnzbd, SickBeard, Transmission).

I am backing up 8TB of data using a CrashPlan+ Family Unlimited 4 year license and I have yet to run into memory issues.

I searched the 3 service.log files for “outofmemory” and found nothing (oldest file started logging 10. Dec. 2012).

I am wondering if it’s because I’m using Backup Sets.

I am using 5 prioritized sets with the following sizes: 11GB, 20GB, 50GB, 2.2TB, 6.3TB.

Sets 1-3 are fully backed up. Set 4 has reached appx 30% but still needs 75 days to complete. Set 5 has reached appx 5% but will probably never complete :-D

They are currently set to always run and to verify selection every day at 10:00.

It is primarily because I have so much data that I want it running as long as the NAS is turned on.

As I understand I should almost expect CrashPlan to crash with OutOfMemory trying to back up all this data using a heap of max 640MB, but Synology Resource Monitor says that it’s only using 28% CPU and 57% Memory and CrashPlan has been running stable for months.

The only issue I’m experiencing is that it’s really, really slow backing up media files, but as other files are backed up fast enough, I’m wondering if this is a built-in limitation (which is kind of understandable).

If you’re getting OutOfMemory errors, I’d suggest that you give Backup Sets a try.

Backup sets will also make sure that important directories/files are always backed up immediately.

I think the amount of memory required can vary quite a lot depending on whether you have fewer larger files versus millions of smaller ones.

Yes, that’s probably a factor. I’m backing up less than 70k files in total.

CrashPlan is however processing Backup Sets individually so it could be used to group the amount of files into something more manageable (although if we’re speaking of “millions” then I’d also go with a memory expansion).

I have 3GB in there (no possibility of putting a bigger RAM chip in there?). I went and upped my heap to 1526M and now it appears to stay running. I will monitor it but presume that I am carving away the RAM that the other apps need to run smoothly? Can someone shed some light on this?

True, but 1.5GB for CrashPlan ought to be sufficient for your needs. Most other apps are pretty limited in their requirements (except Minecraft perhaps).

Charlie, 3 GB is a lot – you simply monitor it either via DSM or via Telnet. So far I never went above 2 GB usage… and this with quite a few apps running, indexing, etc.

I think the amount of req. heap really depends on the amount of files you are backing up.

Similar to another user, with CrashPlan 3.5.2-0021 the client disconnects after a brief time. Patters, you asked what the logs showed but you did not tell which logs to examine.

Making a guess, looking at backup_files.log.0 shows what looks like a long series of continuous restarts:

I 03/03/13 09:33PM 42 [Default] Starting backup to CrashPlan Central: 31,265 files (135GB) to back up

I 03/03/13 09:35PM 42 [Default] Starting backup to CrashPlan Central: 31,265 files (135GB) to back up

I 03/03/13 09:38PM 42 [Default] Starting backup to CrashPlan Central: 31,265 files (135GB) to back up

I 03/03/13 09:40PM 42 [Default] Starting backup to CrashPlan Central: 31,265 files (135GB) to back up

history.log.0 is similar:

I 03/03/13 09:32AM [Default] Starting backup to CrashPlan Central: 31,265 files (135GB) to back up

I 03/03/13 09:35AM CrashPlan started

I 03/03/13 09:35AM Backup scheduled to always run

I 03/03/13 09:35AM [Default] Starting backup to CrashPlan Central: 31,265 files (135GB) to back up

I 03/03/13 09:37AM CrashPlan started

I 03/03/13 09:37AM Backup scheduled to always run

I 03/03/13 09:37AM [Default] Starting backup to CrashPlan Central: 31,265 files (135GB) to back up

I 03/03/13 09:39AM CrashPlan started

There are several “OutOfMemoryError” messages (never used to get these), maybe this is what you want?

[03.03.13 09:59:18.205 ERROR QPub-BackupMgr backup42.service.backup.BackupController] OutOfMemoryError occurred…RESTARTING! message=OutOfMemoryError in BackupQueue! FileTodo[fileTodoIndex = FileTodoIndex[backupFile=BackupFile[8530c2a52c96d000f9215ab430319325, parent=4e4a83ecc72afa4c6b9e7bc2f1620242, type=1, sourcePath=/volume1/Media/.TemporaryItems], newFile=true, state=NORMAL, sourceLength=0, sourceLastMod=1362203169000], lastVersion = null, startTime = 1362333429559, doneAnalyzing = false, numSourceBytesAnalyzed = 0, doneSending = false, %completed = 100.00%, numSourceBytesCompleted = 0], source=BackupQueue[538812805227285202>42, running=t, #tasks=0, sets=[BackupFileTodoSet[backupSetId=1, guid=42, doneLoadingFiles=t, doneLoadingTasks=f, FileTodoSet@15191628[ path = /volume1/@appstore/CrashPlan/cache/cpft1_42, closed = false, hasLock = true, dataSize = 4630261, headerSize = 0], numTodos = 31265, numBytes = 135035892568, lastCommitTs = 0]]], env=BackupEnv[envTime = 1362333428956, near = false, todoSharedMemory = SharedMemory[b.length = 2359296, allocIndex = -1, freeIndex = 0, closed = false, waitingAllocLength = 0], taskSharedMemory = SharedMemory[b.length = 2359296, allocIndex = -1, freeIndex = 0, closed = false, waitingAllocLength = 0]], BackupQueue$TodoWorker@21411547[ threadName = BQTodoWkr-42, stopped = false, running = true, thread.isDaemon = false, thread.isAlive = true, thread = Thread[W13060368_BQTodoWkr-42,5,main]], BackupQueue$TaskWorker@26156009[ threadName = BQTaskWrk-42, stopped = false, running = true, thread.isDaemon = false, thread.isAlive = true, thread = Thread[W3571905_BQTaskWrk-42,5,main]]], oomStack=java.lang.OutOfMemoryError: Java heap space

at gnu.trove.map.hash.TIntLongHashMap.rehash(TIntLongHashMap.java:182)

at gnu.trove.impl.hash.THash.postInsertHook(THash.java:388)

at gnu.trove.map.hash.TIntLongHashMap.doPut(TIntLongHashMap.java:222)

at gnu.trove.map.hash.TIntLongHashMap.put(TIntLongHashMap.java:198)

at com.code42.backup.manifest.WeakIndex.index(WeakIndex.java:67)

at com.code42.backup.manifest.BlockLookupCache$WeakCache.buildIndex(BlockLookupCache.java:466)

at com.code42.backup.manifest.BlockLookupCache$WeakCache.containsWeak(BlockLookupCache.java:404)

at com.code42.backup.manifest.BlockLookupCache$WeakCache.access$800(BlockLookupCache.java:316)

at com.code42.backup.manifest.BlockLookupCache.containsWeak(BlockLookupCache.java:164)

at com.code42.backup.handler.BackupHandler.saveSpecialData(BackupHandler.java:512)

at com.code42.backup.handler.BackupHandler.saveMetadata(BackupHandler.java:442)

at com.code42.backup.handler.BackupHandler.executeSave(BackupHandler.java:227)

at com.code42.backup.save.BackupQueue.saveFile(BackupQueue.java:1328)

at com.code42.backup.save.BackupQueue.processTodo(BackupQueue.java:883)

at com.code42.backup.save.BackupQueue.addFileDirectly(BackupQueue.java:668)

at com.code42.backup.save.BackupQueue.addParentFileTodoIfNecessary(BackupQueue.java:1075)

at com.code42.backup.save.BackupQueue.processTodo(BackupQueue.java:816)

at com.code42.backup.save.BackupQueue.addFileDirectly(BackupQueue.java:668)

at com.code42.backup.save.BackupQueue.addParentFileTodoIfNecessary(BackupQueue.java:1075)

at com.code42.backup.save.BackupQueue.processTodo(BackupQueue.java:816)

at com.code42.backup.save.BackupQueue.access$800(BackupQueue.java:97)

at com.code42.backup.save.BackupQueue$TodoWorker.doWork(BackupQueue.java:1797)

at com.code42.utils.AWorker.run(AWorker.java:149)

at java.lang.Thread.run(Thread.java:662)

, com.code42.exception.DebugException: OutOfMemoryError occurred…RESTARTING! message=OutOfMemoryError in BackupQueue! FileTodo[fileTodoIndex = FileTodoIndex[backupFile=BackupFile[8530c2a52c96d000f9215ab430319325, parent=4e4a83ecc72afa4c6b9e7bc2f1620242, type=1, sourcePath=/volume1/Media/.TemporaryItems], newFile=true, state=NORMAL, sourceLength=0, sourceLastMod=1362203169000], lastVersion = null, startTime = 1362333429559, doneAnalyzing = false, numSourceBytesAnalyzed = 0, doneSending = false, %completed = 100.00%, numSourceBytesCompleted = 0], source=BackupQueue[538812805227285202>42, running=t, #tasks=0, sets=[BackupFileTodoSet[backupSetId=1, guid=42, doneLoadingFiles=t, doneLoadingTasks=f, FileTodoSet@15191628[ path = /volume1/@appstore/CrashPlan/cache/cpft1_42, closed = false, hasLock = true, dataSize = 4630261, headerSize = 0], numTodos = 31265, numBytes = 135035892568, lastCommitTs = 0]]], env=BackupEnv[envTime = 1362333428956, near = false, todoSharedMemory = SharedMemory[b.length = 2359296, allocIndex = -1, freeIndex = 0, closed = false, waitingAllocLength = 0], taskSharedMemory = SharedMemory[b.length = 2359296, allocIndex = -1, freeIndex = 0, closed = false, waitingAllocLength = 0]], BackupQueue$TodoWorker@21411547[ threadName = BQTodoWkr-42, stopped = false, running = true, thread.isDaemon = false, thread.isAlive = true, thread = Thread[W13060368_BQTodoWkr-42,5,main]], BackupQueue$TaskWorker@26156009[ threadName = BQTaskWrk-42, stopped = false, running = true, thread.isDaemon = false, thread.isAlive = true, thread = Thread[W3571905_BQTaskWrk-42,5,main]]], oomStack=java.lang.OutOfMemoryError: Java heap space

at gnu.trove.map.hash.TIntLongHashMap.rehash(TIntLongHashMap.java:182)

at gnu.trove.impl.hash.THash.postInsertHook(THash.java:388)

at gnu.trove.map.hash.TIntLongHashMap.doPut(TIntLongHashMap.java:222)

at gnu.trove.map.hash.TIntLongHashMap.put(TIntLongHashMap.java:198)

at com.code42.backup.manifest.WeakIndex.index(WeakIndex.java:67)

at com.code42.backup.manifest.BlockLookupCache$WeakCache.buildIndex(BlockLookupCache.java:466)

at com.code42.backup.manifest.BlockLookupCache$WeakCache.containsWeak(BlockLookupCache.java:404)

at com.code42.backup.manifest.BlockLookupCache$WeakCache.access$800(BlockLookupCache.java:316)

at com.code42.backup.manifest.BlockLookupCache.containsWeak(BlockLookupCache.java:164)

at com.code42.backup.handler.BackupHandler.saveSpecialData(BackupHandler.java:512)

at com.code42.backup.handler.BackupHandler.saveMetadata(BackupHandler.java:442)

at com.code42.backup.handler.BackupHandler.executeSave(BackupHandler.java:227)

at com.code42.backup.save.BackupQueue.saveFile(BackupQueue.java:1328)

at com.code42.backup.save.BackupQueue.processTodo(BackupQueue.java:883)

at com.code42.backup.save.BackupQueue.addFileDirectly(BackupQueue.java:668)

at com.code42.backup.save.BackupQueue.addParentFileTodoIfNecessary(BackupQueue.java:1075)

at com.code42.backup.save.BackupQueue.processTodo(BackupQueue.java:816)

at com.code42.backup.save.BackupQueue.access$800(BackupQueue.java:97)

at com.code42.backup.save.BackupQueue$TodoWorker.doWork(BackupQueue.java:1797)

at com.code42.utils.AWorker.run(AWorker.java:149)

at java.lang.Thread.run(Thread.java:662)

So after installation, do I still need to keep User Home services enabled? I also see a new CrashPlan share. Is that created by the installation? What is it used for and can it be deleted?

After the CrashPlan app updated itself following my first sign-in, I noticed I had to manually restart the app a few times as mentioned in the notes. Is there any way to automate these post-upgrade restarts so I don’t need to periodically check for this issue? I don’t want an automatic upgrade to suspend my backups without me knowing about it.

Something went wrong. New java update runs automatically after update and is showing “running” status. No option to stop. Crashplan will not start. Says “wait a few seconds and stop and restart the package to allow client connections” App will not start.

Oops! Fixed.

Thanks Patters. That did it.

Is there a path for reverting back to the previous version of crashplan? Thanks

Still cannot make the package work. Updated to DSM 4.2, uninstalled Patters Java, uninstalled Crashplan Pro, and then re-installed both, rebooting, and starting and re-starting CrashPlan service. Also tried same approach with the new version of Java that Synology provides. Getting error on OSX via the terminal session that I use to map the port “channel 3: open failed: administratively prohibited: open failed”

Is this a permissions issue, or something different?

Ignore. I determined what the issue was; it was related to how the port remapping for the headless client works. In case others run into this issue, here is what I found…

1. The package will work with the Synology version of Java, FWIW.

2. I was previously using “admin” on my SSH tunnel to the Synology. My issue here was an SSH error, not a package error. I looked in the ssh config file, and only “root” had access to re-map ports. Logged in as root across the terminal session, and all is good.

I don’t know if something changed in the new DSM (4.2 Beta), but I know for a fact that I was using admin as the login on the ssh string.

Thanks.

More info for those who are running into issues. In addition to having to log in as root to get it going, I had to disable SSH on the DS1511, reboot and re-enable it. I am not sure what the issue is (could be user error), but if you are getting “open failed” messages from your terminal session, this might be a quick fix.

Also, verbose mode on the ssh command has been invaluable in tracking this stuff down.

“ssh -L 4200:localhost:4243 root@192.168.1.178 -v -4”
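The tunnel command quoted above can be wrapped in a small helper so that the NAS address, ports and login are easy to change. This is only a sketch: `crashplan_tunnel_cmd` is a hypothetical name, and the defaults (local port 4200, engine port 4243, login as root) are simply the values from the command above. It prints the command rather than running it, so it can be checked first.

```shell
#!/bin/sh
# Sketch only: build the SSH tunnel command for reaching the headless
# CrashPlan engine. crashplan_tunnel_cmd is a hypothetical helper name,
# not something shipped with the package.
crashplan_tunnel_cmd() {
    nas_host="$1"             # NAS address, e.g. 192.168.1.178
    local_port="${2:-4200}"   # local port the desktop client connects to
    remote_port="${3:-4243}"  # port used on the NAS side of the tunnel
    ssh_user="${4:-root}"     # login that is allowed to forward ports

    # -v gives verbose output for debugging "open failed" errors,
    # -4 forces IPv4, -L sets up the local port forward.
    printf 'ssh -v -4 -L %s:localhost:%s %s@%s\n' \
        "$local_port" "$remote_port" "$ssh_user" "$nas_host"
}

# Example: print the command for the NAS address used above.
crashplan_tunnel_cmd 192.168.1.178
```

Copy the printed line into a terminal to open the tunnel.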

Hi Patters, I’ve upgraded to the .20 release after upgrading to DSM 4.2 and installing Perl. Everything is working OK except that I cannot change the folders being backed up using the Windows client. Whenever I click the Change button on the Backup tab, the popup window opens OK but hangs with a message saying “loading”. Any ideas what’s missing?

I am experiencing exactly the same issue (clicking Change button hangs on message “loading”).

This appears to be a general problem with Crashplan headless, as I am running it on other Linux machines (non-Synology) with exactly the same issue.

It used to work fine on the Synology, which was the first place I installed Crashplan headless, but alas no more. I have contacted Crashplan support but they have simply advised me that the headless configuration is unsupported and are not prepared to help at all (very poor customer service unfortunately).

The only thing I have been able to find on the web is that it may be due to an inconsistent client and server version of Crashplan, however they all appear to be running the same (latest) version.

There must be other people experiencing this issue too, any ideas anyone?

Is it normal to have a large number of java processes when running this package? I see on my Mac that I have just one java process running Crashplan, but on my SynoDS there are 67! Backup seems to be working fine but that does seem suspicious.

Not sure why this happens, but yes it is consistent on all Intel Synology products. It seems likely that they’re threads rather than full Java processes. From memory I think they have the same ID.
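One way to check the threads-versus-processes guess on a Linux system is to look at the `Tgid` (thread group ID) and `Threads` fields the kernel exposes in `/proc/<pid>/status`; threads belonging to one process share a single `Tgid`. A minimal sketch, assuming a standard `/proc` filesystem and an `awk` binary on the NAS (`proc_thread_summary` is a hypothetical helper name):

```shell
#!/bin/sh
# Sketch only: summarise a /proc/<pid>/status-style file as
# "<name> <tgid> <thread count>". proc_thread_summary is a hypothetical name.
proc_thread_summary() {
    awk '
        /^Name:/    { name = $2 }
        /^Tgid:/    { tgid = $2 }
        /^Threads:/ { threads = $2 }
        END         { print name, tgid, threads }
    ' "$1"
}

# Example: list every entry named "java" with its thread group and thread count.
for status_file in /proc/[0-9]*/status; do
    [ -r "$status_file" ] && proc_thread_summary "$status_file"
done | grep '^java ' || true
```

If every `java` line reports the same `Tgid`, the many entries are threads of a single Java process rather than separate processes.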

Patters – Upgraded to DSM 4.2, uninstalled standalone Java and installed the Java Manager package, am running the most recent CPPro package, and after the install went in and forced the Java memory size to 1024MB (this is a 1010+ w/ 3GB RAM).

At any rate, I can no longer get the backup to run. The service appears to keep running but the client (3.4.1) keeps crashing. Nothing in the syno package log and I even tried modifying the list of files to backup to force a new scan.

There is a total of 3.3TB slated for backup and, after many months, I am down to <200GB remaining.

Any ideas?

Best,

Charlie-

Hi, quick question. Is there some way to make crashplan run as root on the NAS? The reason I’m asking is I have some files chmod’d 500 (like ssh-keys), and I just rsync them to the NAS, but now I’m finding that the NAS can’t back them up to crashplan because I think the “crashplan” user can’t access them. The simplest fix would be to just run the crashplan process as root. Could that work?

Hi…I have 2 questions: I set up your CrashPlan package on my Synology and was able to use my computer desktop to access the Synology CrashPlan engine by editing the servicehost entry to my Synology’s IP address. 1) When I run the desktop program, it says that it is scanning the NAS, and I am not sure that any files were uploaded. I see the directory tree on my iPad app, but all of the directories are empty. The engine has been running about 1 day. Should I just be more patient? 2) Also, is it possible to pass the ui.properties file as an argument when running the desktop program, so that by writing a batch file I could launch the desktop either for my computer or for the NAS according to my needs? I tried some options like “CrashPlanDesktop.exe ui-NAS.properties” and could not get it to work. Thanks
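Nothing on this page confirms that the desktop program accepts a properties file as an argument. One possible workaround is to rewrite the servicehost entry in ui.properties just before launching the client. The sketch below shows that idea as a shell function for a Unix-like shell; the same approach could be translated to a Windows batch file. It assumes the key is spelled `serviceHost` (matched case-insensitively); `set_service_host` and the file path in the example are hypothetical.

```shell
#!/bin/sh
# Sketch only: point a CrashPlan ui.properties file at a given engine host.
# set_service_host is a hypothetical helper; the key name serviceHost is an
# assumption based on the "servicehost entry" mentioned above.
set_service_host() {
    props_file="$1"   # path to ui.properties
    host="$2"         # e.g. 127.0.0.1 for the local engine, or the NAS IP
    tmp_file="${props_file}.tmp"

    # Keep every line except an existing serviceHost entry (commented or not).
    # grep exits non-zero when nothing is left to print, hence "|| true".
    grep -v -i '^#\{0,1\}[[:space:]]*serviceHost=' "$props_file" > "$tmp_file" || true

    # Append the new entry and replace the original file.
    printf 'serviceHost=%s\n' "$host" >> "$tmp_file"
    mv "$tmp_file" "$props_file"
}

# Example (hypothetical path): switch to the NAS, then start the desktop client.
# set_service_host "/path/to/conf/ui.properties" 192.168.1.178
```

Calling the function again with `127.0.0.1` before launching would switch the client back to the local engine.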

Hello,

Thank you so much for putting together these instructions, and the packages!

I have installed everything on my Synology DS1512+, and to me, it looks like everything is working. I can connect to the headless client through the CrashPlan application on my machine, and I can select which folders I want to include in the backup, however, when I start the backup, it just sits saying:

“Waiting for connection”

I have tried re-starting the application/service, but it still comes back saying the same thing.

Do you have any clues as to what could be going on here?

Thanks!

Gary

Hi Patters, thanks for your efforts!!